How sustainable are Generative AI models?

This blog post outlines why large language models (LLMs), and therefore generative AI models, need so much power to be trained, deployed, and maintained. We also look at why some solutions are more sustainable than others.

What impacts the carbon footprint of large language models?

There are three main factors that impact the carbon footprint of LLMs like GPT-4:

1) The footprint of training the model

2) The footprint of inference. "Inference" refers to an LLM's ability to predict outcomes from new input data. The model essentially uses past knowledge to make educated guesses about the meaning of new sentences or to predict what comes next in a conversation. It's like connecting the dots using what it has learned from reading lots of text.

3) The footprint of producing all the required hardware and the capabilities of the cloud data center.

Training is the most energy-intensive part of such models. Importantly, larger models also use more energy during deployment. However, this study shows that inference is a major consumer of energy as well, accounting for up to 90% of ML workloads.

How high are the energy costs of the large language models used in generative AI?

We have summarized some important facts to shed light on the environmental impact of large language models:

- The Megatron-Turing model from NVIDIA needed hundreds of NVIDIA DGX A100 multi-GPU servers, each using up to ~6.5 kilowatts of power (1).

- Training one BERT model (an LLM by Google) uses roughly the same amount of energy, and has roughly the same carbon footprint, as a trans-Atlantic flight (2).

- Researchers from the paper "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜" explain that training models such as OpenAI's GPT-4 or PaLM from Google emits around 300 tons of CO2 (for comparison, an average person accounts for about 5 tons a year), as outlined by HBR.
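
To put these figures into perspective, here is a rough back-of-envelope sketch in Python. Only the 6.5 kW per server, the 300 tons of CO2, and the 5 tons per person per year come from the list above. The server count, the training duration, and the grid carbon intensity are purely illustrative assumptions, and the function names are made up for this example.

```python
# Back-of-envelope estimate. Values marked "assumed" are hypothetical
# and do not come from the sources cited in this post.

POWER_PER_SERVER_KW = 6.5           # peak power per DGX A100 server (from the list above)
TRAINING_EMISSIONS_T = 300.0        # tons of CO2 per training run (from the list above)
PERSON_EMISSIONS_T_PER_YEAR = 5.0   # tons of CO2 per person per year (from the list above)


def training_energy_kwh(num_servers: int, hours: float,
                        power_kw: float = POWER_PER_SERVER_KW) -> float:
    """Upper-bound energy use if every server ran at peak power the whole time."""
    return num_servers * power_kw * hours


def emissions_tons(energy_kwh: float, kg_co2_per_kwh: float) -> float:
    """Convert energy into tons of CO2 for a given grid carbon intensity."""
    return energy_kwh * kg_co2_per_kwh / 1000.0


def person_years(tons_co2: float,
                 per_person: float = PERSON_EMISSIONS_T_PER_YEAR) -> float:
    """Express emissions as years of an average person's footprint."""
    return tons_co2 / per_person


if __name__ == "__main__":
    # Assumed: 500 servers running for 30 days on a grid emitting 0.4 kg CO2 per kWh.
    energy = training_energy_kwh(num_servers=500, hours=30 * 24)
    print(f"Energy: {energy:,.0f} kWh")
    print(f"Emissions: {emissions_tons(energy, kg_co2_per_kwh=0.4):,.0f} t CO2")
    print(f"300 t CO2 equals {person_years(TRAINING_EMISSIONS_T):.0f} person-years")
```

The last line shows that 300 tons of CO2 corresponds to the yearly footprint of 60 average people. The other two numbers depend entirely on the assumed inputs, so replace them with values for your own setup.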

What is a sustainable large language model strategy?

If possible, use existing, pre-trained models from LLM providers such as Microsoft/OpenAI, Google (Bard, PaLM), and Meta AI (LLaMA), and do not create your own generative models from scratch (side note: this also costs around $100 million, according to a recently published article on HuggingFace). Instead, fine-tune an existing model for your use case.

What are best practices for building greener LLMs?

- Besides using pre-trained models, use small models (with fewer parameters) by removing unnecessary parameters (so-called "pruning") without jeopardizing the accuracy you need (e.g. your model should still answer with good confidence).

- Reduce training time by experimenting with DistilBERT, a distilled version of BERT. Distilled models have also been shown to be more energy efficient, as outlined in sustainable AI in the cloud.

- Fine-tune your model with your own data for some period instead of training it from scratch.

- Use cloud-based environments which provide scalable infrastructure (Azure, AWS, Google)

- Use specialized hardware that speeds up training (Graphcore, Habana) and inference (Google TPU, AWS Inferentia).

- Other technical tricks: merge model layers (so-called "fusion") and store model parameters at lower precision (say, 8 bits instead of 32 bits, so-called "quantization").

- Run ML models on small, low-powered edge devices, without needing to send the data to a server for processing. TinyML is one example.

- Monitor your carbon footprint with tooling such as CodeCarbon, Green Algorithms, and ML CO2 Impact.

- Encourage your data science team to set benchmarking standards and to include sustainability in their model considerations.

- Finally, consider whether you really need generative AI at all. If you need help, we can support you by evaluating your use case.
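
To illustrate the quantization trick from the list above (storing parameters in 8 bits instead of 32), here is a minimal pure-Python sketch. It uses simple symmetric linear quantization with one shared scale factor on a made-up list of weights. Real ML frameworks use more elaborate schemes, and the function names here are hypothetical, so treat this as an illustration of the idea rather than how any particular library does it.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the 8-bit integers."""
    return [v * scale for v in quantized]


def memory_bytes(num_params: int, bits: int) -> int:
    """Storage needed for the raw parameters at a given bit width."""
    return num_params * bits // 8


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -0.77]  # made-up example weights
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", quantized)
    print(f"max rounding error: {max_err:.4f}")

    n = 1_000_000_000  # a model with one billion parameters
    print(f"32-bit: {memory_bytes(n, 32) / 1e9:.0f} GB")
    print(f"8-bit:  {memory_bytes(n, 8) / 1e9:.0f} GB")
```

For a model with one billion parameters, the raw weights shrink from about 4 GB to about 1 GB. The price is a small rounding error per weight, which is why you should check that accuracy is still good enough for your use case after quantizing.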

Summary about sustainable generative AI

You can find a comparison of LLMs and their sizes in the previous blog post. Additional material can be found here:

- The Green Software Foundation sets standards.

- This article by Accenture outlines additional facts.

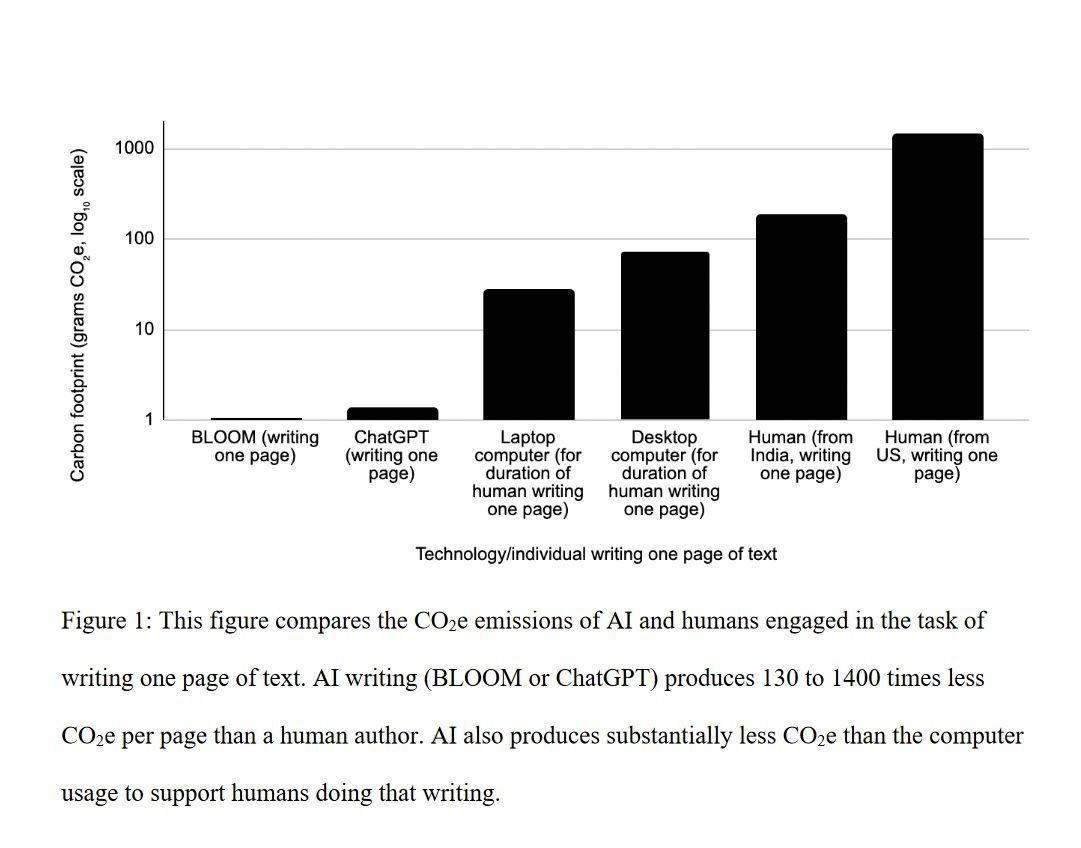

Responsible AI is a crucial foundation for all generative AI products. It is important that we consider the carbon footprint of AI models to protect our earth and our future. However, some studies also compare the emissions of AI-generated content with those of human-generated content. This paper can be found here.

Need support with your Generative AI Strategy and Implementation?

🚀 AI Strategy, business and tech support

🚀 ChatGPT, Generative AI & Conversational AI (Chatbot)

🚀 Support with AI product development

🚀 AI Tools and Automation