
Are GPTs Relevant for my AI Strategy?

This article gives you a comprehensive overview of implementation strategies when adopting Generative AI.

Are GPTs relevant for my Company's AI Strategy?

MyGPTs, or GPTs, are customized versions of ChatGPT (learn more about GPTs and alternatives in our last blog post) that OpenAI lets you build for your own purposes. They can also be offered to other users in OpenAI's GPT Store, which means you can build a GPT for your specific domain and distribute it there. OpenAI launched the GPT Store in ChatGPT on 10.01.2024.

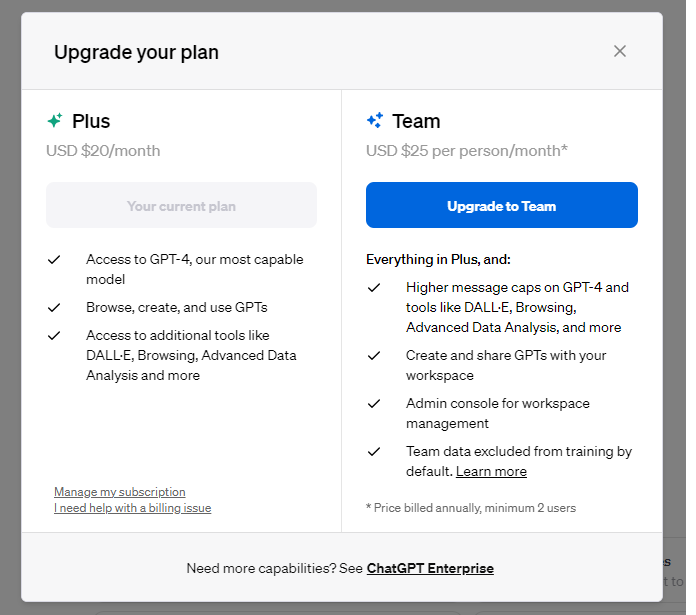

With 3 million GPTs available in January 2024, the GPT Store is a potentially crucial distribution channel for companies. In addition, OpenAI launched the Team collaboration option (costing $25 per month), which is aimed at small and medium-sized enterprises that want to collaborate more easily within ChatGPT.

We researched companies and the GPT Store to see whether companies treat GPTs as a major part of their AI investments. Our finding: most GPTs are still created by individual creators or by tool providers as an extension of their products.

But why were businesses still avoiding building their own GPTs in March 2024? GPTs have significant drawbacks. Builders have little control over prompt engineering and over the retrieval-augmented generation (RAG) process, which limits how much answer quality can be improved with internal data. Furthermore, companies are bound to the ChatGPT ecosystem: to use a company's GPT, users need a ChatGPT Plus account. Builders who wish to share their GPTs in the store must also follow specific guidelines and ensure their creations comply with OpenAI's usage policies.
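To make the point about control more concrete, here is a minimal sketch of what owning the retrieval and prompt steps can look like outside the GPT builder. Everything in it is an illustrative assumption and not part of our research: the sample documents, the function names `retrieve` and `build_prompt`, and the simple word-overlap ranking, which stands in for the embedding-based search a production RAG system would more likely use. The resulting prompt would then be sent to a language model of your choice.

```python
import re

# Hypothetical internal FAQ entries; a real system would load company documents.
DOCUMENTS = [
    "Vacation requests must be submitted two weeks in advance.",
    "Expense reports are reimbursed at the end of each month.",
    "The VPN client is required for remote access to internal tools.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by the number of words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Assemble a prompt whose wording and context are fully under your control."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("When are expense reports reimbursed?", DOCUMENTS))
```

In a custom setup like this, the ranking method, the number of retrieved passages and the instruction wording can each be tuned and tested, which is the flexibility a GPT inside ChatGPT largely hides.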

Three Strategies for How Companies Can Make Use of GPTs

1) GPTs as prototypes: Most corporates implement GPTs as a playground or "trial and error" sandbox to develop prototypes for clients or their own future generative AI applications.

2) Community and AI branding presence: Companies see a presence in the GPT Store from day one as a community enabler and a growth option.

3) GPTs as an internal support tool: Some companies load their most important internal FAQs into a GPT and use it as a cost-effective assistant for their client support staff. This may sound like an unusual use case, but we have seen it repeatedly in our market research.

What is the OpenAI Enterprise Teams Version?

If you decide to use GPTs as part of your AI tool set, you can consider various options. There is an Enterprise version of ChatGPT, which makes sense when teams want to collaborate. Most companies in the US seem to use this option. It is also comparable to the Claude Team version from Anthropic.

ChatGPT Enterprise Team version for SMEs to collaborate and create their own "GPTs".

More on what GPTs are and how to build them.

Need support with your Generative AI Strategy and Implementation?

🚀 AI Strategy, business and tech support

🚀 ChatGPT, Generative AI & Conversational AI (Chatbot)

🚀 Support with AI product development

🚀 AI Tools and Automation

Call: + 78 874 04 08

talk(at)voicetechhub.com

Etzbergstrasse 37, 8405 Winterthur

©VOOCE GmbH 2019 - 2024 - All rights reserved.