
Alternatives to OpenAI: Testing Bard vs. Claude vs. ChatGPT

In this blog post you will learn about the alternatives to OpenAI's ChatGPT.

Where is Bard better than ChatGPT?

Bard is Google's response to OpenAI's ChatGPT. What makes Bard different from ChatGPT?

- It is free to try, whereas ChatGPT Plus costs $20 per month.

- The desktop version has a microphone, so you can speak your question directly and get a response.

- Bard has built-in internet access, whereas in ChatGPT you have to switch to a separate service (Web Browsing).

- Bard covers far more languages (265 as of October 2023)

- One drawback: Bard cannot generate pictures. ChatGPT can generate them with DALL-E 3, whereas Bard only offers you a nice description.

Where is Claude better than ChatGPT?

Claude is the counterpart to ChatGPT developed by the company Anthropic.

- This tool is currently accessible only in the UK and the US and is not yet available in Switzerland. You might consider using NordVPN to explore its functionality from your country.

- Claude has one big advantage over ChatGPT: it can process more "context" (see Generative AI from A to Z). Its input can be up to 100'000 tokens, or around 75'000 words (100 tokens equal around 75 words). GPT-3.5 has a limit of 4096 tokens (around 3072 words) and GPT-4 of 8192 tokens (around 6000 words). So when you want to upload huge files, use Claude.
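The token-to-word rule of thumb above (100 tokens equal around 75 words) can be turned into a quick estimate. The following is a minimal Python sketch. The function names and the `WORDS_PER_TOKEN` constant are illustrative assumptions based on that rule of thumb. Real token counts depend on the model's tokenizer and on the language of the text.

```python
# Rough conversion between tokens and English words, based on the
# rule of thumb "100 tokens equal around 75 words".
# This is an estimate only, not a real tokenizer.
WORDS_PER_TOKEN = 0.75  # assumption taken from the rule of thumb


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit into a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a given number of English words will use."""
    return round(words / WORDS_PER_TOKEN)


# Context limits mentioned in this article, in tokens
for limit in (4_096, 8_192, 100_000):
    print(f"{limit} tokens is about {tokens_to_words(limit)} words")
```

The same helper works in the other direction: `words_to_tokens(75_000)` gives a rough idea of how many tokens a 75'000-word document would need.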

Source: Bard has a better microphone option than ChatGPT Plus but, unlike ChatGPT Plus, cannot generate pictures

What is Poe and what are its advantages?

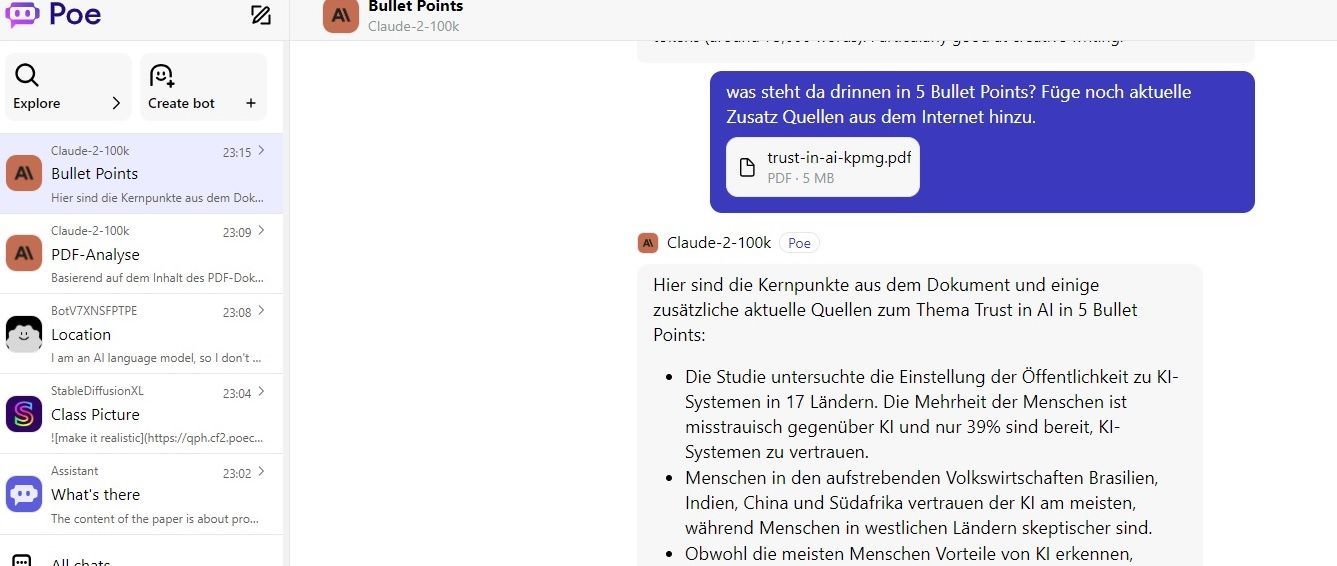

Poe by Quora is a platform that offers several LLM chatbots at a glance. You can think of it as a "WeChat for LLMs". The great feature of Poe is that you can test large language models such as Claude, GPT, and Llama in one single place, and for free (to a certain extent). On top of that, you can create your own chatbots in no time, although they are not as sophisticated as those built with Writesonic, GPT-trainer, or Dante AI, which are dedicated generative AI chatbot tools.

With Poe you can try out the main advantage of Claude, namely analyzing large amounts of data. This depends on the so-called context window, which Hazy Research defines as the number of tokens the model can take as input when generating responses. For example, the context window of the original GPT-3 is 2K (around 2000 tokens), and GPT-4 offers a larger one of up to 32K. There is a trend and a demand for increasingly larger context windows in LLMs. Larger context windows improve LLM performance and make the models more useful in applications where GPT models perform less well.

Claude is not available in Switzerland as of October 2023. By using Poe you can overcome this limitation and try it out already. Unfortunately, Google Bard is not integrated into Quora's Poe yet.
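To make the idea of a context window more concrete, the Python sketch below splits a long text into pieces that each fit a given window. It estimates tokens from the word count using the rough ratio of 100 tokens per 75 words. The function names and that ratio are assumptions for illustration only. A real application would count tokens with the tokenizer of the model it targets.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: about 100 tokens per 75 words (assumption)."""
    n_words = len(text.split())
    # Integer ceiling of n_words * 100 / 75
    return (n_words * 100 + 74) // 75


def split_for_context(text: str, context_tokens: int) -> list[str]:
    """Split text into word-based chunks that each fit the context window."""
    words = text.split()
    # Largest word count whose estimated token count stays within the window
    words_per_chunk = max(1, context_tokens * 75 // 100)
    return [
        " ".join(words[i : i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


document = "word " * 10_000  # stand-in for a long document of 10,000 words
for window in (2_000, 32_000, 100_000):
    chunks = split_for_context(document, window)
    print(f"{window} token window: {len(chunks)} chunk(s)")
```

With a small window the document has to be cut into several pieces and the model never sees it as a whole. With a large window the same document fits into a single request.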

Poe in action: analyze huge PDFs with Claude, as it can process more context (data tokens sent to the LLM)

OpenAI vs. Bard vs. Claude vs. Poe - Which Platform is the best?

Looking at the pros and cons of the generative AI platforms, we can see a real race between them: each tool keeps launching another great capability that the others do not offer (e.g. context window, creativity, open-source availability, analysis, costs).

With the very recent activation of voice capabilities in the ChatGPT app, also in Switzerland and other European countries, plus its enormous capability to analyze all kinds of file formats (Excel, PDF, CSV), we still see OpenAI's ChatGPT as the leader among generative AI platforms.


©VOOCE GmbH 2019 - 2024 - All rights reserved.