
How Does Perplexity AI Work?

What is Perplexity AI?

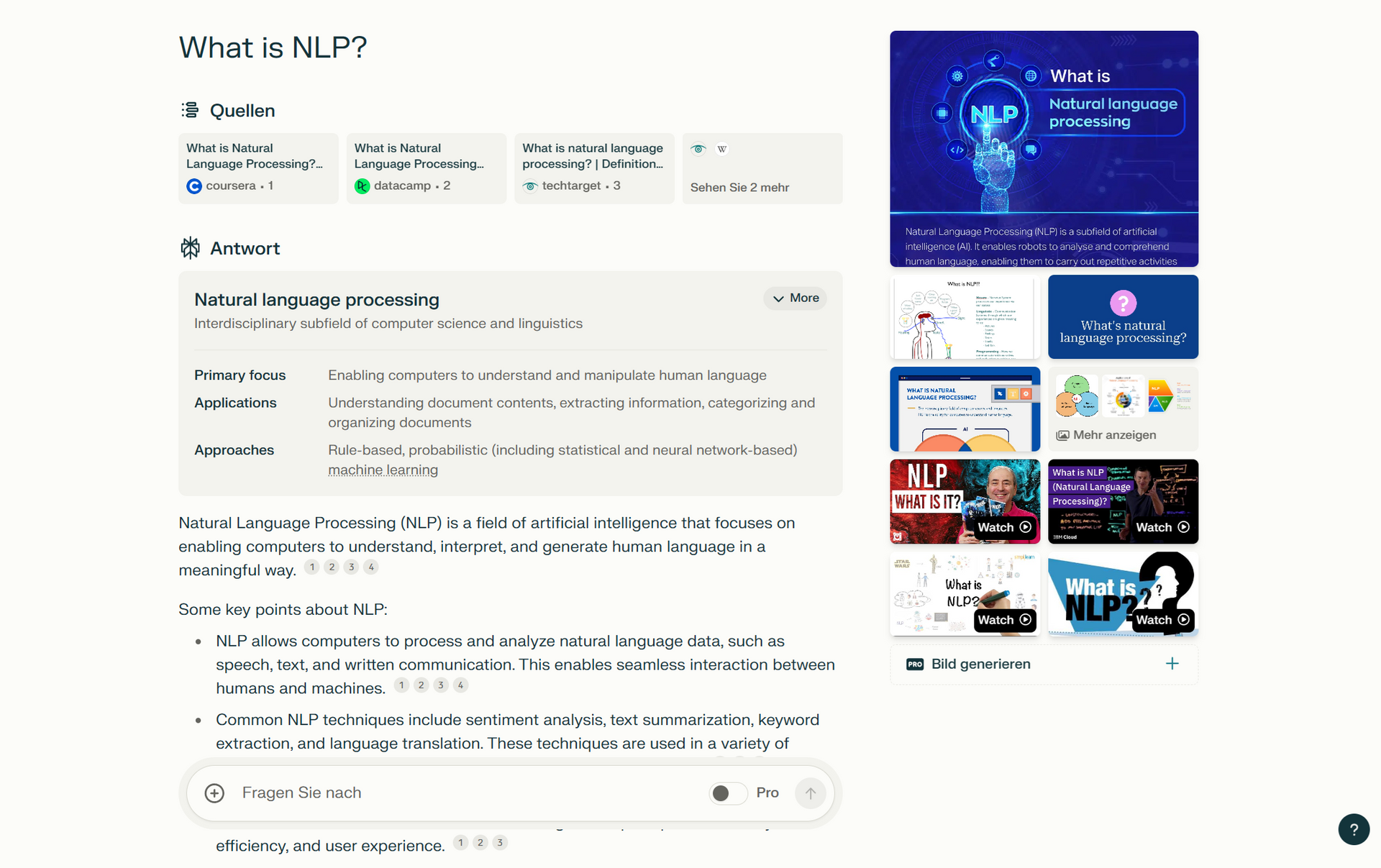

Perplexity AI can be described as the "new Google", or AI-enabled search. It is an intelligent AI search application that answers users' prompts with precise source citations, which sets it apart from ChatGPT and other large language model interfaces.

How does Perplexity differ from Google?

Google retrieves search results through classic search engine mechanisms: it indexes web content and ranks it using relevance and engagement signals. Perplexity, by contrast, first searches the internet for potentially relevant sources and then uses large language models and natural language processing to deliver a concise, precise answer based on those sources.
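Perplexity does not disclose its internal pipeline here, so the following is only a minimal Python sketch, under our own assumptions, of the general "search first, then answer with citations" pattern described above. An in-memory list of documents stands in for web search, simple keyword overlap stands in for ranking, and stitching the retrieved passages together stands in for the language model. All names (`Source`, `search`, `answer_with_citations`, `EXAMPLE_CORPUS`) and the example.com URLs are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Source:
    url: str   # hypothetical URL, for illustration only
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens, used for naive keyword overlap."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, corpus: list[Source], k: int = 2) -> list[Source]:
    """Step 1 (stand-in for web search): rank sources by word overlap with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(s.text)), s) for s in corpus]
    scored = [pair for pair in scored if pair[0] > 0]      # drop irrelevant sources
    scored.sort(key=lambda pair: pair[0], reverse=True)    # stable: ties keep corpus order
    return [s for _, s in scored[:k]]


def answer_with_citations(query: str, corpus: list[Source], k: int = 2) -> str:
    """Step 2 (stand-in for LLM synthesis): join the retrieved passages,
    tag each with a numbered citation, and list the cited sources."""
    sources = search(query, corpus, k)
    if not sources:
        return "No relevant sources found."
    body = " ".join(f"{s.text} [{i}]" for i, s in enumerate(sources, start=1))
    refs = "\n".join(f"[{i}] {s.url}" for i, s in enumerate(sources, start=1))
    return f"{body}\n\nSources:\n{refs}"


# Hypothetical example data standing in for pages found on the web.
EXAMPLE_CORPUS = [
    Source("https://example.com/llm", "Large language models generate text from prompts."),
    Source("https://example.com/search", "Search engines index and rank web pages."),
]

if __name__ == "__main__":
    print(answer_with_citations("How do search engines rank pages?", EXAMPLE_CORPUS))
```

The point of the sketch is the ordering: sources are selected before any answer text is produced, so every statement in the answer can carry a citation back to a specific source. A real system would replace keyword overlap with a web search index and the string joining with a language model.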

When to use Perplexity and when to use ChatGPT?

Perplexity is ideal if you want to research citations for your academic work or check the accuracy of your references. You can also have casual conversations with it, similar to ChatGPT. We particularly like its user-friendly interface, which automatically includes video and image sources.

Moreover, our experiments have shown that Perplexity performs notably better when you are looking for recent updates, because it retrieves sources in real time. ChatGPT, by contrast, is based on a model trained only on data up to a certain cutoff date (e.g., March 2023). Knowledge beyond that date is therefore not part of what ChatGPT has learned, whereas Perplexity provides current and relevant sources.

Both AI platforms offer a Pro version, which you need if you want to generate images or upload files with Perplexity or ChatGPT. A particular advantage of Perplexity is that you can choose between different large language models (e.g., Claude, Sonar, Llama), which also makes it possible to upload large files. You can find more about the differences between Claude, Gemini, and other large language models in this article.

We support you with guidance when it comes to Generative AI challenges. Meet the Voicetechhub team today.


©VOOCE GmbH 2019 - 2024 - All rights reserved.